Special thanks to: Robert Sams, Gavin Wood, Mark Karpeles and countless cryptocurrency critics on online forums for helping to develop the thoughts behind this article

If you were to ask the average cryptocurrency or blockchain enthusiast what the key single fundamental advantage of the technology is, there is a high chance that they will give you one particular predictable answer: it does not require trust. Unlike traditional (financial or other) systems, where you need to trust a particular entity to maintain the database of who holds what quantity of funds, who owns a particular internet-of-things-enabled device, or what the status is of a particular financial contract, blockchains allow you to create systems where you can keep track of the answers to those questions without any need to trust anyone at all (at least in theory). Rather than being subject to the whims of any one arbitrary party, someone using a blockchain technology can take comfort in the knowledge that the status of their identity, funds or device ownership is safely and securely maintained in an ultra-secure, trustless distributed ledger Backed By Math™.

Contrasting this, however, there is the standard critique that one might hear on forums like buttcoin: what exactly is this “trust problem” that people are so worried about? Ironically enough, unlike in “crypto land”, where exchanges seem to routinely disappear with millions of dollars in customer funds, sometimes after apparently secretly being insolvent for years, businesses in the real world don’t seem to have any of these problems. Sure, credit card fraud exists, and is a major source of worry at least among Americans, but the total global loss is a mere $190 billion – less than 0.4% of global GDP, compared to the MtGox loss that seems to have cost potentially more than the value of all Bitcoin transactions in that year. At least in the developed world, if you put your money in a bank, it’s safe; even if the bank goes awry, your funds are in most cases protected up to over $100,000 by your national equivalent of the FDIC – even in the case of the Cyprus depositor haircut, everything up to the deposit insurance limit was kept intact. From such a perspective, one can easily see how the traditional “centralized system” is serving people just fine. So what’s the big deal?

Trust

First, it is important to point out that distrust is not nearly the only reason to use blockchains; I mentioned some much more mundane use cases in the previous part of this series, and once you start thinking of the blockchain simply as a database that anyone can read any part of but where each individual user can only write to their own little portion, and where you can also run programs on the data with guaranteed execution, then it becomes quite plausible even for a completely non-ideological mind to see how the blockchain might eventually take its place as a rather mundane and boring technology among the likes of MongoDB, AngularJS and continuation-based web servers – by no means even close to as revolutionary as the internet itself, but still quite powerful. However, many people are interested in blockchains specifically because of their property of “trustlessness”, and so this property is worth discussing.

To start off, let us first try to demystify this rather complicated and awe-inspiring concept of “trust” – and, at the same time, trustlessness as its antonym. What exactly is trust? Dictionaries in this case tend not to give particularly good definitions; for example, if we check Wiktionary, we get:

- Confidence in or reliance on some person or quality: He needs to regain her trust if he is ever going to win her back.

- Dependence upon something in the future; hope.

- Confidence in the future payment for goods or services supplied; credit: I was out of cash, but the landlady let me have it on trust.

There is also the legal definition:

A relationship created at the direction of an individual, in which one or more persons hold the individual’s property subject to certain duties to use and protect it for the benefit of others.

Neither is quite precise or complete enough for our purposes, but they both get us pretty close. If we want a more formal and abstract definition, we can provide one as follows: trust is a model of a particular person or group’s expected behavior, and the adjustment of one’s own behavior in accordance with that model. Trust is a belief that a particular person or group will be affected by a particular set of goals and incentives at a particular time, and the willingness to take actions that rely on that model being correct.

Just from the more standard dictionary definition, one may fall into the trap of thinking that trust is somehow inherently illogical or irrational, and that one should strive hard to trust as little as possible. In reality, however, we can see that such thinking is completely fallacious. Everyone has beliefs about everything; in fact, there is a set of theorems which basically states that if you are a perfectly rational agent, you pretty much have to have a probability in your head for every possible claim and update those probabilities according to certain rules. But then if you have a belief, it is irrational not to act on it. If, in your own internal model of the behavior of the individuals in your local geographic area, there is a greater than 0.01% chance that if you leave your door unlocked, someone will steal $10000 worth of goods from your house, and you value the inconvenience of carrying your key around at $1, then you should lock your door and bring the key along when you go to work. But if there is a less than 0.01% chance that someone will come in and steal that much, it is irrational to lock the door.
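The door-lock example is an expected-value comparison: lock the door when the probability of theft times the loss exceeds the cost of carrying the key. A minimal sketch of that rule follows; the function name and the two sample probabilities are illustrative assumptions, not part of the original argument.

```python
def should_lock_door(p_theft: float, loss_if_theft: float, cost_of_locking: float) -> bool:
    """Return True when the expected loss from an unlocked door exceeds the cost of locking it."""
    expected_loss = p_theft * loss_if_theft
    return expected_loss > cost_of_locking


# Numbers from the text: $10000 at stake, $1 of inconvenience for carrying the key.
# The break-even probability is cost / loss = 1 / 10000, i.e. 0.01%.
LOSS = 10_000
KEY_COST = 1

# Hypothetical beliefs on either side of the break-even point.
print(should_lock_door(0.0002, LOSS, KEY_COST))   # belief: 0.02% chance of theft
print(should_lock_door(0.00005, LOSS, KEY_COST))  # belief: 0.005% chance of theft
```

The decision flips purely on the believed probability, which is the sense in which acting on a model of other people's behavior is rational rather than naive.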

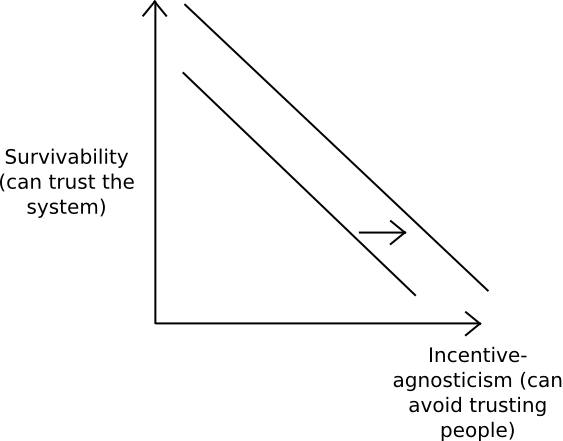

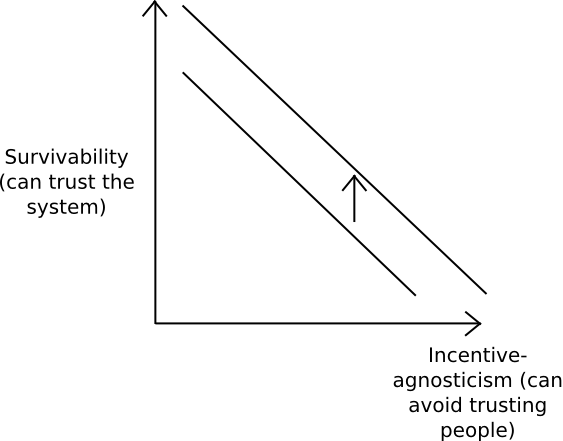

“Trustlessness” in its absolute form does not exist. Given any system that is maintained by humans, there exists a hypothetical combination of motivations and incentives that would lead those humans to successfully collude to screw you over, and so if you trust the system to work you are necessarily trusting the total set of humans not to have that particular combination of motivations and incentives. But that does not mean that trustlessness is not a useful direction to strive in. When a system is claiming to be “trustless”, what it is actually trying to do is expand the possible set of motivations that individuals are allowed to have while still maintaining a particular low probability of failure. When a system is claiming to be “trustful”, it is trying to reduce the probability of failure given a particular set of motivations. Thus, we can see that “trustlessness” and “trustfulness”, at least as directions, are actually the exact same thing.

Note that in practice the two may be different connotatively: “trustless” systems tend to try harder to improve system trustability given a model where we know little about individuals’ motivations, and “trustful” systems tend to try harder to improve system trustability given a model where we know a lot about individuals’ motivations, and we know that those motivations are with higher probability honest. Both directions are likely worthwhile.

Another important point to note is that trust is not binary, and it is not even scalar. Rather, it is of key importance what it is that you are trusting people to do or not to do. One particular counterintuitive point is that it is quite possible, and often happens, that we trust someone not to do X, but we don’t trust them not to do Y, even though that person doing X is worse for you than them doing Y. You trust thousands of people every day not to suddenly whip a knife out of their pockets as you pass by and stab you to death, but you do not trust complete strangers to hold on to $500 worth of cash. Of course, the reason why is clear: no one has an incentive to jump out at you with a knife, and there is a very strong disincentive, but if someone has your $500 they have a $500 incentive to run away with it, and they can quite easily never get caught (and if they do the penalties aren’t that bad). Sometimes, even if incentives in both cases are similar, such counterintuitive outcomes can come simply because you have nuanced knowledge of someone else’s morality; as a general rule, you can trust that people are good at preventing themselves from doing things which are “obviously wrong”, but morality does quite often fray around the edges where you can convince yourself to extend the envelope of the grey (see Bruce Schneier’s concept of “moral pressures” in Liars and Outliers and Dan Ariely’s The Honest Truth about Dishonesty for more on this).

This particular nuance of trust has direct relevance in finance: although, since the 2008 financial crisis, there has indeed been an upsurge in distrust in the financial system, the distrust that the public feels is not a feeling that there is a high risk that the bank will steal the people’s assets blatantly and directly and overwrite everyone’s bank balance to zero. That is certainly the worst possible thing that they could do to you (aside from the CEO jumping out at you when you enter the bank branch and stabbing you to death), but it is not a likely thing for them to do: it is highly illegal, obviously detectable and will lead to the parties involved going to jail for a long, long time – and, just as importantly, it is hard for the bank CEO to convince themselves or their daughter that they are still a morally upright person if they do something like that. Rather, we are afraid that the banks will perform one of many more sneaky and mischievous tricks, like convincing us that a particular financial product has a certain exposure profile but hiding the black swan risks. Even while we are always afraid that large corporations will do things to us that are moderately shady, we are at the same time quite sure that they won’t do anything extremely outright evil – at least most of the time.

So where in today’s world are we missing trust? What is our model of people’s goals and incentives? Who do we rely on but don’t trust, who could we rely on but don’t because we don’t trust them, what exactly is it that we are fearing they would do, and how can decentralized blockchain technology help?

Finance

There are several answers. First, in some cases, as it turns out, the centralized big boys still very much can’t be trusted. In modern financial systems, particularly banks and trading systems, there exists a concept of “settlement” – essentially, the process that follows a transaction or trade, and whose final result is that the assets that you bought actually become yours from a legal property-ownership standpoint. After the trade and before settlement, all that you have is a promise that the counterparty will pay – a legally binding promise, but even legal bonds count for nothing when the counterparty is insolvent. If a transaction nets you an expected profit of 0.01%, and you are trading with a company that you estimate has a chance of 1 in 10000 of going insolvent on any particular day, then a single day of settlement time makes all the difference. In international transactions, the same situation applies, except this time the parties actually don’t trust each other’s intentions, as they are in different jurisdictions and some operate in jurisdictions where the law is actually quite weak or even corrupt.
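To see why one day of settlement matters with these numbers, here is a rough expected-value sketch. It assumes, purely for illustration, that the whole notional is lost if the counterparty becomes insolvent before settlement and that insolvency on each day is independent; the function name and the $1,000,000 notional are hypothetical.

```python
def expected_trade_value(notional: float, profit_rate: float,
                         daily_default_prob: float, settlement_days: int) -> float:
    """Expected value of one trade, in the same units as `notional`.

    Illustrative assumption: insolvency before settlement loses the full
    notional; otherwise the trade earns `profit_rate` times the notional.
    """
    p_survive = (1 - daily_default_prob) ** settlement_days
    p_default = 1 - p_survive
    return p_survive * notional * profit_rate - p_default * notional


notional = 1_000_000  # hypothetical trade size
instant = expected_trade_value(notional, 0.0001, 1 / 10_000, settlement_days=0)
one_day = expected_trade_value(notional, 0.0001, 1 / 10_000, settlement_days=1)
print(f"instant settlement: {instant:.2f}")
print(f"one-day settlement: {one_day:.2f}")
```

Under these assumptions the 0.01% expected profit and the 1-in-10000 daily insolvency risk roughly cancel, so the one-day case comes out marginally negative while instant settlement keeps the full expected profit.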

Back in the old days, legal ownership of securities would be defined by ownership of a piece of paper. Now, the ledgers are electronic. But then, who maintains the electronic ledger? And do we trust them? In the financial industry more than anywhere else, the combination of a high ratio of capital-at-stake to expected-return and the high ability to profit from malfeasance means that trust risks are greater than in perhaps almost any other legal white-market industry. Hence, can decentralized trustworthy computing platforms – and very specifically, politically decentralized trustworthy computing platforms – save the day?

According to quite a few people, yes they can. However, in these cases, commentators such as Tim Swanson have pointed out a potential flaw with the “fully open” PoW/PoS approach: it is a little too open. In part, there may be regulatory issues with having a settlement system based on a completely anonymous set of consensus participants; more importantly, however, restricting the system can actually reduce the probability that the participants will collude and the system will break. Who would you really trust more: a collection of 31 well-vetted banks that are clearly separate entities, located in different countries, not owned by the same investing conglomerates, and are legally accountable if they collude to screw you over, or a group of mining firms of unknown quantity and size with no real-world reputations, 90% of whose chips may be produced in Taiwan or Shenzhen? For mainstream securities settlement, the answer that most people in the world would give seems rather clear. But then, in ten years’ time, if the set of miners or the set of anonymous stakeholders of some particular currency proves itself trustworthy, eventually banks may warm up to even the more “pure cryptoanarchic” model – or they may not.

Interaction and Common Knowledge

Another important point is that even if each of us has some set of entities that we trust, not all of us have the same set of entities. IBM is perfectly fine trusting IBM, but IBM would likely not want its own critical infrastructure to be running on top of Google’s cloud. Even more pertinently, neither IBM nor Google may be interested in having their critical infrastructure running on top of Tencent’s cloud, and potentially increasing their exposure to the Chinese government (and likewise, especially following the recent NSA scandals, there has been increasing interest in keeping one’s data outside the US, although this must be mentioned with the caveat that much of the concern is about privacy, not protection against active interference, and blockchains are much more useful at providing the latter than the former).

So, what if IBM and Tencent want to build applications that interact with each other heavily? One option is to simply call each other’s services via JSON-RPC, or some similar framework, but as a programming environment this is somewhat limited; every program must either live in IBM land, and take 500 milliseconds round-trip to send a request to Tencent, or live in Tencent land, and take 500 milliseconds to send a request to IBM. Reliability also necessarily drops below 100%. One solution that may be useful in some cases is to simply have both pieces of code living on the same execution environment, even if each piece has a different administrator – but then, the shared execution environment needs to be trusted by both parties. Blockchains seem like a perfect solution, at least for some use cases. The largest benefits may come when there is a need for a very large number of users to interact; when it’s just IBM and Tencent, they can easily make some kind of tailored bilateral system, but when N companies are interacting with each other, you would need either N² bilateral systems among every pair of companies, or you can more simply make a single shared system for everyone – and that system might as well be called a blockchain.
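The scaling argument can be made concrete by counting. If each unordered pair of companies needs its own bilateral system, the exact count is N(N-1)/2, which grows on the order of N², while a shared system stays at one. The sketch below uses a hypothetical helper name and sample sizes.

```python
from math import comb


def bilateral_integrations(n_companies: int) -> int:
    """Number of distinct company pairs, one bilateral system per pair."""
    return comb(n_companies, 2)


SHARED_SYSTEMS = 1  # a single shared execution environment, regardless of N

for n in (2, 10, 100):
    print(f"{n} companies: {bilateral_integrations(n)} bilateral systems "
          f"vs {SHARED_SYSTEMS} shared system")
```

With two companies the bilateral approach is no worse than a shared one, which matches the point that IBM and Tencent alone can easily build something tailored; the gap only opens as N grows.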

Trust for the Rest of Us

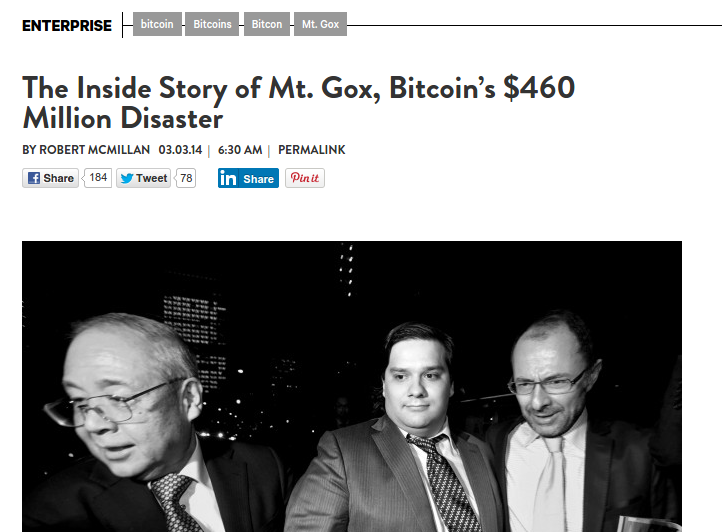

The second case for decentralization is more subtle. Rather than concentrating on the lack of trust, here we emphasize the barrier to entry in becoming a locus of trust. Sure, billion dollar companies can certainly become loci of trust just fine, and indeed it is the case that they generally work pretty well – with a few important exceptions that we will discuss later on. However, their ability to do so comes at a high cost. Although the fact that so many Bitcoin businesses have managed to abscond with their customers’ funds is sometimes perceived as a strike against the decentralized economy, it is in fact something quite different: it is a strike against an economy with low social capital. It shows that the high degree of trust that mainstream institutions have today is not something that simply arose because powerful people are especially nice and tech geeks are less nice; rather, it is the result of centuries of social capital built up over a process which would take many decades and many trillions of dollars of investment to replicate. Quite often, the institutions only play nice because they are regulated by governments – and the regulation itself is in turn not without large secondary costs. Without that buildup of social capital, well, we simply have this:

And lest you think that such incidents are a unique feature of “cryptoland”, back in the real world we also have this:

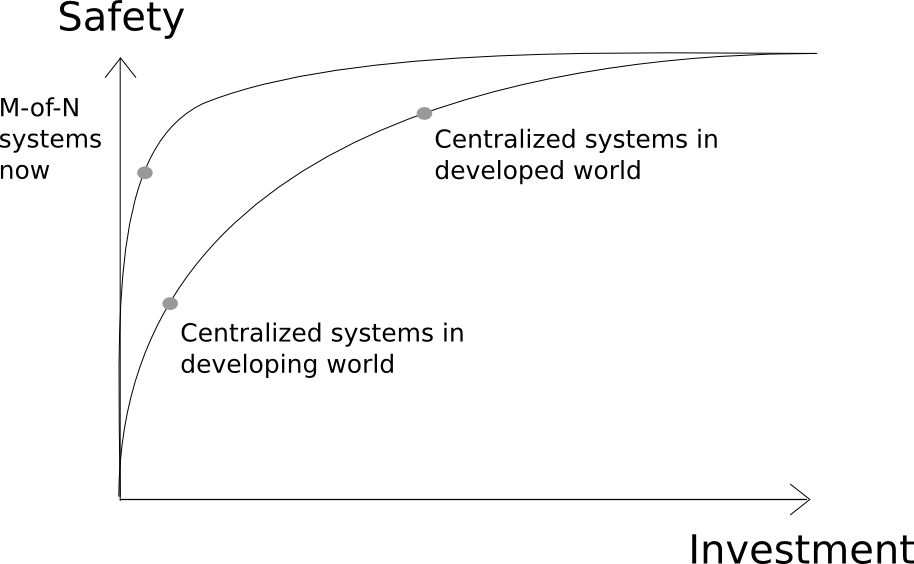

The key promise of decentralized technology, under this viewpoint, is not to create systems that are even more trustworthy than current large institutions; if one simply looks at basic statistics in the developed world, one can see that many such systems can quite reasonably be described as being “trustworthy enough”, in that their annual rate of failure is sufficiently low that other factors dominate in the choice of which platform to use. Rather, the key promise of decentralized technology is to provide a shortcut to let future application developers get there faster:

Traditionally, creating a service that holds critical customer data or large quantities of customer funds has involved a very high degree of trust, and therefore a very large degree of effort – some of it involving complying with regulations, some convincing an established partner to lend you their brand name, some buying extremely expensive suits and renting fake “virtual office space” in the heart of downtown New York or Tokyo, and some simply being an established company that has served customers well for decades. If you want to be entrusted with millions, well, better be prepared to spend millions.

With blockchain technology, however, the exact opposite is potentially the case. A 5-of-8 multisig consisting of a set of random individuals around the world may well have a lower probability of failure than all but the largest of institutions – and at a millionth of the cost. Blockchain-based applications allow developers to prove that they are honest – by setting up a system where they do not even have any more power than the users do. If a group of largely 20-to-25-year-old college dropouts were to announce that they were opening a new prediction market, and asked people to deposit millions of dollars to them via bank deposit, they would likely be rightfully viewed with suspicion. With blockchain technology, on the other hand, they can release Augur as a decentralized application, and they can assure the whole world that their ability to run away with everyone’s funds is drastically reduced. Particularly, imagine what would be the case if this particular group of people was based in India, Afghanistan or, heck, Nigeria. If they were not a decentralized application, they would likely not have been able to get anyone’s trust at all. Even in the developed world, the less effort you need to spend convincing users that you are trustworthy, the more you are free to work on developing your actual product.
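One rough way to reason about the 5-of-8 claim is a binomial tail: if each signer misbehaves independently with some probability, how likely is it that enough of them do so at once? Independence and the 5% per-signer figure below are assumptions made only for this sketch; real signers may be correlated, which would raise these numbers.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """Probability that at least k of n independent events occur, each with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


P_DISHONEST = 0.05    # hypothetical chance that a given signer would collude
P_UNAVAILABLE = 0.05  # hypothetical chance that a given signer disappears

# Funds can be stolen only if 5 or more of the 8 signers collude.
theft = prob_at_least(5, 8, P_DISHONEST)
# Funds are frozen if 4 or more signers vanish, leaving fewer than 5 to sign.
freeze = prob_at_least(4, 8, P_UNAVAILABLE)

print(f"P(theft)  = {theft:.2e}")
print(f"P(freeze) = {freeze:.2e}")
```

Even with a fairly pessimistic per-signer probability, requiring five simultaneous failures pushes the collusion risk far below the risk of trusting any single signer, at the price of a somewhat higher chance of losing access.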

Subtler Subterfuge

Finally, of course, we can get back to the large corporations. It is indeed a truth, in our modern age, that large companies are increasingly distrusted – they are increasingly distrusted by regulators, they are increasingly distrusted by the public, and they are increasingly distrusted by each other. But, at least in the developed world, it seems obvious that they are not going to go around zeroing out people’s balances or causing their devices to fail in arbitrarily bad ways for the fun of it. So if we distrust these behemoths, what is it that we are afraid they will do? Trust, as discussed above, isn’t a boolean or a scalar, it’s a model of someone else’s projected behavior. So what are the likely failure modes in our model?

The answer generally comes from the concept of base-layer services, as outlined in the previous part of this series. There are certain kinds of services which happen to have the property that they (1) end up having other services depending on them, (2) have high switching costs, and (3) have high network effects, and in these cases, if a private company operating a centralized service creates a monopoly they have substantial latitude over what they can do to protect their own interests and establish a permanent position for themselves at the center of society – at the expense of everyone else. The latest incident that shows the danger came one week ago, when Twitter cut video streaming service Meerkat off of its social network API. Meerkat’s offense: allowing users to very easily import their social connections from Twitter.

When a service becomes a monopoly, it has the incentive to keep that monopoly. Whether that entails disrupting the survival of companies that try to build on the platform in a way that competes with its offerings, or restricting access to users’ data inside the system, or making it easy to come in but hard to move away, there are plenty of opportunities to slowly and subtly chip away at users’ freedoms. And we increasingly do not trust companies not to do that. Building on blockchain infrastructure, on the other hand, is a way for an application developer to commit not to be a jerk, forever.

… And Laziness

In some cases, there is also another concern: what if a particular service shuts down? The canonical example here is the various incarnations of “RemindMe” services, which you can ask to send you a particular message at some point in the future – perhaps in a week, perhaps in a month, and perhaps in 25 years. In the 25-year case (and realistically even the 5-year case), however, all currently existing services of that kind are pretty much useless for a rather obvious reason: there is no guarantee that the company operating the service will continue to exist in 5 years, much less 25. Not trusting people not to disappear is a no-brainer; for someone to disappear, they do not even have to be actively malicious – they just have to be lazy.

This is a serious problem on the internet, where 49% of documents cited in court cases are no longer accessible because the servers on which the pages were located are no longer online, and to that end projects like IPFS are trying to resolve the problem via a politically decentralized content storage network: instead of referring to a file by the name of the entity that controls it (which an address like “https://blog.ethereum.org/2015/04/13/visions-part-1-the-value-of-blockchain-technology/” basically does), we refer to the file by the hash of the file, and when a user asks for the file any node on the network can provide it – in the project’s own words, creating “the permanent web”. Blockchains are the permanent web for software daemons.
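The idea of naming a file by its hash rather than by its host can be sketched in a few lines. This is a simplified illustration using a plain SHA-256 hex digest and in-memory nodes; it is not IPFS's actual addressing format or API, and the `Node` class and `fetch` function are hypothetical names.

```python
import hashlib
from typing import Dict, List, Optional


def content_address(data: bytes) -> str:
    """Derive the address from the content itself, not from whoever hosts it."""
    return hashlib.sha256(data).hexdigest()


class Node:
    """A toy storage node holding content keyed by its hash."""

    def __init__(self) -> None:
        self.store: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        addr = content_address(data)
        self.store[addr] = data
        return addr

    def get(self, addr: str) -> Optional[bytes]:
        return self.store.get(addr)


def fetch(addr: str, nodes: List[Node]) -> Optional[bytes]:
    """Ask nodes in turn; accept the first answer whose hash matches the address."""
    for node in nodes:
        data = node.get(addr)
        if data is not None and content_address(data) == addr:
            return data
    return None


# Usage: the original host goes offline, but any node with a copy can serve it.
origin, mirror = Node(), Node()
addr = origin.put(b"a cited document")
mirror.put(b"a cited document")
print(fetch(addr, [mirror]) == b"a cited document")
```

Because the requester re-hashes whatever it receives, it does not need to trust the node that served the file, only the address it started with.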

This is particularly relevant in the internet of things space; a recent IBM report cites the following as one of its major concerns with the default choice for internet of things infrastructure, a centralized “cloud”:

While many companies are quick to enter the market for smart, connected devices, they have yet to discover that it is very hard to exit. While consumers replace smartphones and PCs every 18 to 36 months, the expectation is for door locks, LED bulbs and other basic pieces of infrastructure to last for years, even decades, without needing replacement … In the IoT world, the cost of software updates and fixes in products long obsolete and discontinued will weigh on the balance sheets of corporations for decades, often even beyond manufacturer obsolescence.

From the manufacturer’s point of view, having to maintain servers to deal with remaining instances of obsolete products is an annoying expense and a chore. From the consumer’s point of view, there is always the nagging fear: what if the manufacturer simply shrugs off this responsibility, and disappears without bothering to maintain continuity? Having fully autonomous devices managing themselves using blockchain infrastructure seems like a decent way out.

Conclusion

Trust is a complicated thing. We all want, at least to some degree, to be able to live without it, and be confident that we will be able to achieve our goals without having to take the risk of someone else’s bad behavior – much like every farmer would love to have their crops blossom without having to worry about the weather and the sun. But an economy requires cooperation, and cooperation requires dealing with people. However, impossibility of an ultimate end does not imply futility of the direction, and in any case it is always a worthwhile task to, whatever our model is, figure out how to reduce the probability that our systems will fail.

Decentralization of the kind described here is not prevalent in the physical world primarily because the duplication costs involved are high, and consensus is hard: you don’t want to have to go to five of eight government offices in order to get your passport issued, and organizations where every decision is made by a large executive board tend to decrease quickly in efficiency. In cryptoland, however, we get to benefit from forty years of rapid development of low-cost computer hardware capable of executing billions of processing cycles per second in silicon – and so, it is rational to at least explore the hypothesis that the optimal tradeoffs should be different. This is in some ways the decentralized software industry’s ultimate bet – now let’s go ahead and see how far we can take it.

The next part of the series will discuss the future of blockchain technology from a technical perspective, and show what decentralized computation and transaction processing platforms may look like in ten years’ time.
