'Twas the day before genesis, when all was prepared, geth was in sync, my beacon node paired. Firewalls configured, VLANs galore, hours of preparation meant nothing ignored.

Then all at once everything went awry, the SSD in my system decided to die. My configs were gone, chain data was history, nothing to do but trust in next day delivery.

I found myself designing backups and redundancies. Complicated systems consumed my fantasies. Thinking further I came to realise: worrying about these kinds of failures was quite unwise.

Events

The beacon chain has several mechanisms to incentivise validator behaviour, all of which depend on the current state of the network. It is therefore vital to consider each failure case in the context of how other validators might fail at the same time when deciding which ways of securing your node(s) are worthwhile and which are not.

As an active validator, your balance either increases or decreases; it never goes sideways*. A reasonable way of maximising your profits is therefore to minimise your downsides. There are three ways the beacon chain can reduce your balance:

- Penalties are issued when your validator misses one of its duties (e.g. because it is offline)

- Inactivity Leaks are handed out to validators that miss their duties while the network is failing to finalise (i.e. when your validator being offline is highly correlated with other validators being offline)

- Slashings are given to validators who produce contradictory blocks or attestations, which could therefore be used in an attack

* On average, a validator’s balance may stay the same, but for any given duty, they are either rewarded or punished.

Correlation

The effect of a single validator being offline or performing slashable behaviour is small in terms of the overall health of the beacon chain. It is therefore not punished heavily. In contrast, if many validators are offline, the balance of offline validators can decrease much more rapidly.

Similarly, if many validators perform slashable actions at the same time, from the beacon chain’s perspective, this is indistinguishable from an attack. It is therefore treated as such, and 100% of the offending validators’ stake is burned.

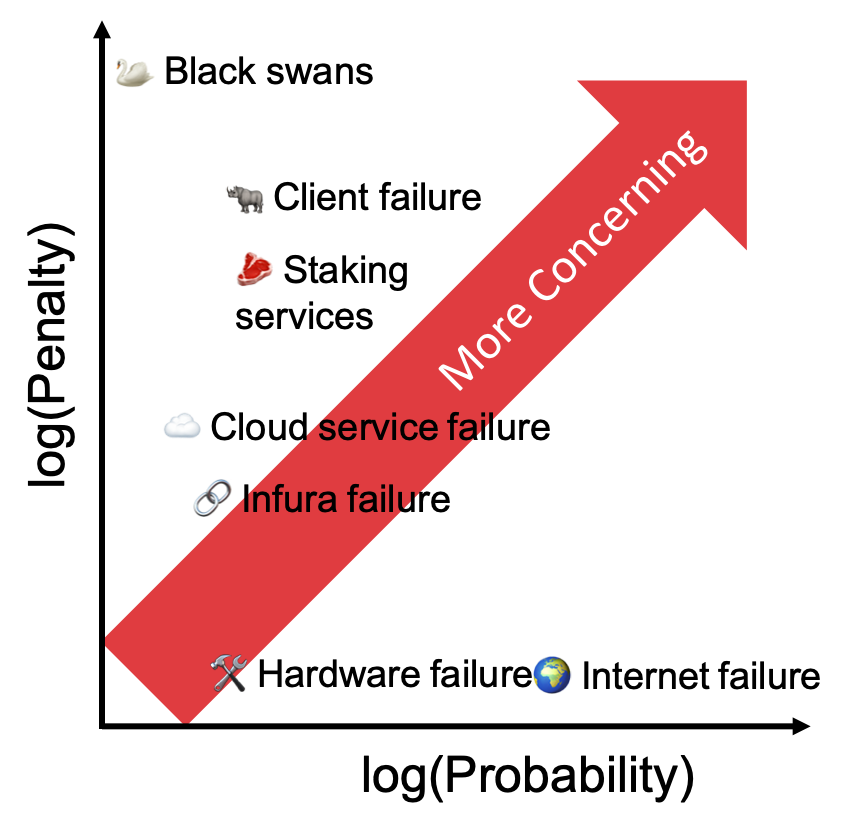

Because of these “anti-correlation” incentives, validators should worry more about failures that might affect others at the same time rather than isolated, individual issues.
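The slashing side of this incentive can be illustrated with a toy model. The sketch below is a simplification, not the beacon chain's actual accounting: it assumes the penalty grows linearly with the share of total stake slashed in the same period and is capped at the whole balance. The function name and the `multiplier` value of 3 are assumptions chosen for illustration.

```python
def correlation_penalty(balance_eth: float, slashed_fraction: float,
                        multiplier: float = 3.0) -> float:
    """ETH burned from one slashed validator in a simplified model.

    slashed_fraction is the share of total stake slashed in the same period.
    multiplier is an illustrative assumption, not a value taken from the article.
    Ignores any fixed initial penalty and integer rounding.
    """
    if not 0.0 <= slashed_fraction <= 1.0:
        raise ValueError("slashed_fraction must be between 0 and 1")
    # The penalty scales with how many others were slashed, capped at 100%.
    return balance_eth * min(multiplier * slashed_fraction, 1.0)


# An isolated slashing versus a mass slashing event (hypothetical fractions).
for fraction in (0.001, 0.1, 0.5):
    print(f"{fraction:.1%} of stake slashed -> {correlation_penalty(32.0, fraction):.3f} ETH burned")
```

Under these assumptions an isolated mistake costs a small fraction of the stake, while the same mistake made alongside a large share of the network costs everything.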

Causes and their probability

So let’s think through some failure cases and examine them through the lens of how many others would be affected at the same time, and how badly your validators would be punished.

I disagree with @econoar here that these are worst case issues. These are more moderate level issues. Home UPS and Dual WAN address failures aren’t correlated with other users and so should be far down your list of concerns.

🌍 Internet/power failure

If you are validating from home, then it’s highly likely you’ll encounter one of these failures at some point in the future. Residential internet and power connections do not have guaranteed uptime. However, when the internet does go down, or your power is out, the outage is usually limited to your area and even then only for a few hours.

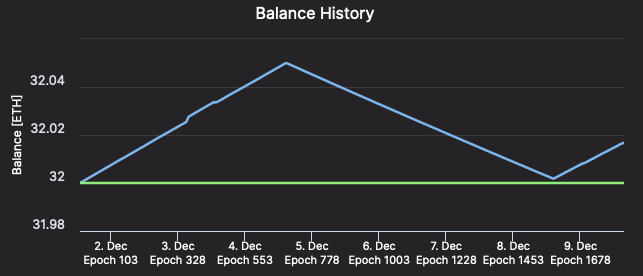

Unless you have very spotty internet/power, it might not be worthwhile paying for failover connections. You’ll receive a few hours of penalties, but as the rest of the network is running normally, your penalties will be roughly equal to what your rewards would have been over the same period. In other words, a failure lasting k hours sets your validator’s balance back to roughly where it was k hours before the failure, and after k additional hours online your validator’s balance will be back to its pre-failure amount.

[Validator #12661 regaining ETH as quickly as it was lost – Beaconcha.in]
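The "k hours down, k hours to recover" rule of thumb can be checked with a small simulation. This is a minimal sketch that assumes hourly penalties exactly equal hourly rewards while the rest of the network is healthy; the function name and the reward figure are hypothetical.

```python
def balance_trajectory(start_eth: float, hourly_reward_eth: float,
                       outage_hours: int, total_hours: int) -> list[float]:
    """Balance at the end of each hour, with the outage starting at hour 0.

    Assumes the offline penalty per hour equals the online reward per hour.
    """
    balances = [start_eth]
    for hour in range(total_hours):
        online = hour >= outage_hours
        delta = hourly_reward_eth if online else -hourly_reward_eth
        balances.append(balances[-1] + delta)
    return balances


k = 6  # outage length in hours (hypothetical)
trajectory = balance_trajectory(32.0, 0.0005, outage_hours=k, total_hours=2 * k)
print(f"lowest balance: {min(trajectory):.4f} ETH, after {2 * k} hours: {trajectory[-1]:.4f} ETH")
```

The balance bottoms out at the end of the outage and returns to its starting value after another k hours online.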

🛠 Hardware failure

Like internet failure, hardware failure strikes randomly, and when it does, your node might be down for a few days. It is valuable to consider the expected rewards over the lifetime of the validator versus the cost of redundant hardware. Is the expected value of the failure (the offline penalties times the chance of it happening) greater than the cost of the redundant hardware?

Personally, I find the chance of failure low enough, and the cost of fully redundant hardware high enough, that it almost certainly isn’t worth it. But then again, I am not a whale 🐳; as with any failure scenario, you need to evaluate how this applies to your particular situation.
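One way to frame the hardware question is to turn it around and ask how likely a failure would have to be before redundancy pays for itself. The sketch below assumes the offline penalties are a fixed amount per day; all names and figures are hypothetical.

```python
def break_even_failure_probability(hardware_cost_eth: float, downtime_days: float,
                                   daily_penalty_eth: float) -> float:
    """Failure probability above which redundant hardware is worth buying.

    Expected loss = probability * downtime_days * daily_penalty_eth, so the
    break-even point is where that equals the hardware cost. A result above
    1.0 means redundancy cannot pay for itself under these assumptions.
    """
    penalty_if_it_fails = downtime_days * daily_penalty_eth
    if penalty_if_it_fails <= 0:
        raise ValueError("downtime and daily penalty must be positive")
    return hardware_cost_eth / penalty_if_it_fails


# A single validator versus a large staker (hypothetical numbers).
print(break_even_failure_probability(0.5, downtime_days=3, daily_penalty_eth=0.01))
print(break_even_failure_probability(0.5, downtime_days=3, daily_penalty_eth=1.0))
```

With the same hardware cost, the break-even probability drops as the stake at risk grows, which is why the answer differs for a whale.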

☁️ Cloud services failure

Maybe, to avoid the risks of hardware or internet failure altogether, you decide to go with a cloud provider. With a cloud provider, you have introduced the risk of correlated failures. The question that matters is, how many other validators are using the same cloud provider as you?

A week before genesis, Amazon AWS had a prolonged outage which affected a large portion of the web. If something similar were to happen now, enough validators would go offline at the same time that the inactivity penalties would kick in.

Even worse, if a cloud provider were to duplicate the VM running your node and accidentally leave the old and the new node running at the same time, you could be slashed (the penalties incurred would be especially bad if this accidental duplication affected many other nodes too).

If you are insistent on relying on a cloud provider, consider switching to a smaller provider. It may end up saving you a lot of ETH.

🥩 Staking Services

There are several staking services on mainnet today with varying degrees of decentralisation, but they all contain an increased risk of correlated failures if you trust them with your ETH. These services are necessary components of the eth2 ecosystem, especially for those with less than 32 ETH or without the technical know-how to stake, but they are architected by humans and therefore imperfect.

If staking pools eventually grow to be as large as eth1 mining pools, then it is conceivable that a bug could cause mass slashings or inactivity penalties for their members.

🔗 Infura Failure

Last month Infura went down for 6 hours causing outages across the Ethereum ecosystem; it is easy to see how this is likely to result in correlated failures for eth2 validators.

In addition, third-party eth1 API providers necessarily rate-limit calls to their service; in the past this has left validators on the Medalla testnet unable to produce valid blocks.

The best solution is to run your own eth1 node: you won’t encounter rate-limiting, it will reduce the likelihood of your failures being correlated, and it will improve the decentralisation of the network as a whole.

Eth2 clients have also started adding the ability to specify multiple eth1 nodes. This makes it easy to switch to a backup endpoint in the event your primary endpoint fails (Lighthouse: --eth1-endpoints, Prysm: PR#8062; Nimbus & Teku will likely add support sometime in the future).

I highly recommend adding backup API options as cheap/free insurance (EthereumNodes.com shows the free and paid API endpoints and their current status). This is useful whether you are running your own eth1 node or not.
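The idea behind a backup endpoint list is simply to try each endpoint in priority order and use the first one that responds. The sketch below illustrates that logic only; it is not how Lighthouse or Prysm implement it, and the function names, URLs and health check are hypothetical.

```python
from typing import Callable, Sequence


def first_healthy_endpoint(endpoints: Sequence[str],
                           is_healthy: Callable[[str], bool]) -> str:
    """Return the first endpoint that passes the health check, in priority order."""
    for url in endpoints:
        try:
            if is_healthy(url):
                return url
        except OSError:
            # Connection errors and timeouts count as unhealthy; try the next one.
            continue
    raise RuntimeError("no eth1 endpoint is reachable")


# Hypothetical endpoints: your own eth1 node first, a third-party API as backup.
ENDPOINTS = ["http://localhost:8545", "https://backup-provider.example/v1"]


def fake_health_check(url: str) -> bool:
    """Stand-in for a real request; pretends the local node is down."""
    if "localhost" in url:
        raise ConnectionError("local node unreachable")
    return True


print(first_healthy_endpoint(ENDPOINTS, fake_health_check))
```

Listing your own node first keeps the third-party provider as a fallback rather than a dependency.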

🦏 Failure of a particular eth2 client

Despite all the code review, audits, and rockstar work, all of the eth2 clients have bugs hiding somewhere. Most of them are minor and will be caught before they present a major problem in production, but there is always the chance that the client you choose will go offline or cause you to be slashed. If this were to happen, you would not want to be running a client with > 1/3 of the nodes on the network.
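The 1/3 threshold matters because finality requires at least two thirds of the stake to be attesting. A minimal sketch of that arithmetic follows; it treats a client's share of nodes as its share of stake, which is a simplifying assumption, and the function name is hypothetical.

```python
from fractions import Fraction


def chain_can_finalise(offline_share: Fraction) -> bool:
    """True if the stake still online meets the two-thirds needed for finality."""
    return 1 - offline_share >= Fraction(2, 3)


# Hypothetical client shares going offline because of a bug.
for share in (Fraction(1, 4), Fraction(1, 3), Fraction(2, 5)):
    print(f"client with {share} of stake offline -> finalises: {chain_can_finalise(share)}")
```

Once the offline share exceeds 1/3 the chain stops finalising, and the validators on the faulty client are the ones exposed to the inactivity leak.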

You must strike a tradeoff between what you deem to be the best client vs how popular that client is. Consider reading through the documentation of another client so that if something happens to your node, you know what to expect in terms of installing and configuring a different client.

If you have lots of ETH at stake, it is probably worth running multiple clients each with some of your ETH to avoid putting all your eggs in one basket. Otherwise, Vouch is an interesting offering for multi-node staking infrastructure, and Secret Shared Validators are seeing rapid development.

🦢 Black swans

There are of course many unlikely, unpredictable, yet dangerous scenarios that will always present a risk and that lie outside the obvious decisions about your staking set-up. Examples such as Spectre and Meltdown at the hardware level, or kernel bugs such as BleedingTooth, hint at some of the hazards that exist across the entire hardware stack. By definition, it is not possible to entirely predict and avoid these problems; instead, you generally must react after the fact.

What to worry about

Ultimately this comes down to calculating the expected value E(X) of a given failure: how likely an event is to happen, and what the penalties would be if it did. It is vital to consider these failures in the context of the rest of the eth2 network since the correlation greatly affects the penalties at hand. Comparing the expected cost of a failure to the cost of mitigating it will give you the rational answer as to whether it is worth getting in front of.
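To make the comparison concrete, the sketch below ranks a handful of failure scenarios by how much mitigating each one is expected to save. Every probability, penalty and cost here is a made-up placeholder; the point is the comparison, not the numbers.

```python
from dataclasses import dataclass


@dataclass
class FailureScenario:
    name: str
    probability: float          # chance of the failure over the period considered
    penalty_eth: float          # penalties if it happens, including correlation effects
    mitigation_cost_eth: float  # cost of preventing it

    @property
    def expected_loss_eth(self) -> float:
        return self.probability * self.penalty_eth


def worth_mitigating(scenarios: list[FailureScenario]) -> list[FailureScenario]:
    """Scenarios whose expected loss exceeds the mitigation cost, best saving first."""
    candidates = [s for s in scenarios if s.expected_loss_eth > s.mitigation_cost_eth]
    return sorted(candidates,
                  key=lambda s: s.expected_loss_eth - s.mitigation_cost_eth,
                  reverse=True)


# Hypothetical estimates for a single home validator.
scenarios = [
    FailureScenario("home internet outage", 0.9, 0.002, 0.3),
    FailureScenario("correlated cloud outage", 0.05, 2.0, 0.02),
    FailureScenario("duplicate validator keys", 0.01, 32.0, 0.0),
]
for s in worth_mitigating(scenarios):
    print(f"{s.name}: expected loss {s.expected_loss_eth:.3f} ETH")
```

With these placeholder numbers the frequent but uncorrelated outage drops off the list, while the rare but heavily punished failures stay on it.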

No one knows all the ways a node can fail, nor how likely each failure is, but by making individual estimates of the chances of each failure type and mitigating the biggest risks, the “wisdom of the crowd” will prevail and on average the network as a whole will make a good estimate. Furthermore, because of the different risks each validator faces, and the differing estimates of those risks, the failures you did not account for will be caught by others and therefore the degree of correlation will be reduced. Yay decentralisation!

📕 DON’T PANIC

Finally, if something does happen to your node, don’t panic! Even during inactivity leaks, penalties are small on short time scales. Take a few moments to think through what happened and why. Then make a plan of action to fix the problem. Then take a deep breath before you proceed. An extra 5 minutes of penalties is preferable to being slashed because you did something ill-advised in a rush.

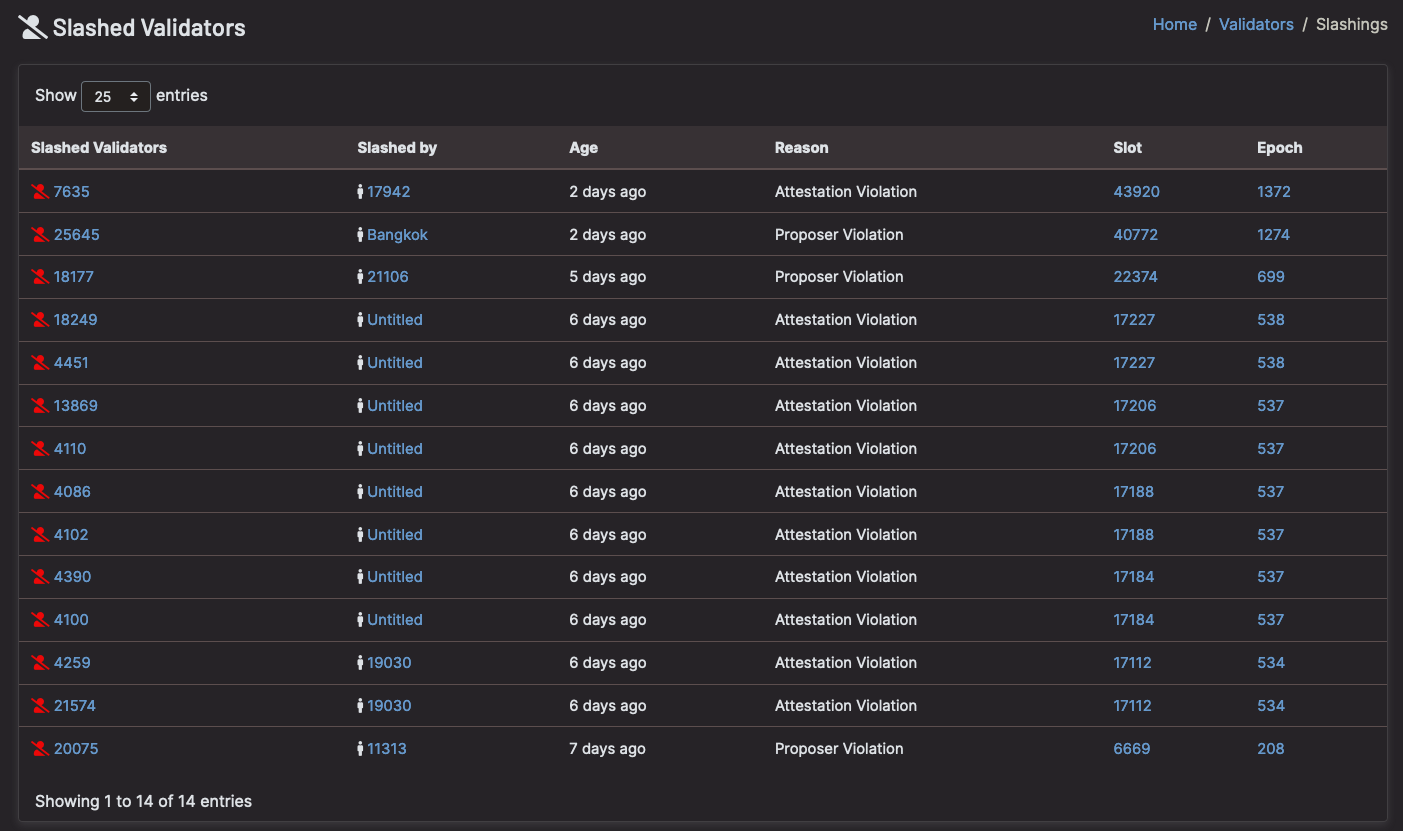

Most of all: 🚨 Do not run 2 nodes with the same validator keys! 🚨

Thanks Danny Ryan, Joseph Schweitzer, and Sacha Yves Saint-Leger for review

[Slashings because validators ran >1 node – Beaconcha.in]
