*This is part #1 of a series where anyone can ask questions about Geth and I’ll attempt to answer the highest voted one each week with a mini writeup. This week’s highest voted question was: Could you share how the flat db structure is different from the legacy structure?*

State in Ethereum

Before diving into an acceleration structure, let’s recap a bit what Ethereum calls state and how it is stored currently at its various levels of abstraction.

Ethereum maintains two different types of state: the set of accounts; and a set of storage slots for each contract account. From a purely abstract perspective, both of these are simple key/value mappings. The set of accounts maps addresses to their nonce, balance, etc. A storage area of a single contract maps arbitrary keys – defined and used by the contract – to arbitrary values.

Unfortunately, whilst storing these key-value pairs as flat data would be very efficient, verifying their correctness becomes computationally intractable. Every time a modification would be made, we’d need to hash all that data from scratch.

Instead of hashing the entire dataset all the time, we could split it up into small contiguous chunks and build a tree on top! The original useful data would be in the leaves, and each internal node would be a hash of everything below it. This would allow us to only recalculate a logarithmic number of hashes when something is modified. This data structure actually has a name, it’s the famous Merkle tree.
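To make the "logarithmic number of hashes" claim concrete, here is a minimal Python sketch of a binary Merkle tree. It is an illustration only: SHA-256, the pairing rule for odd levels and the helper names `build_levels` and `update_leaf` are assumptions of this sketch, not anything Ethereum or Geth prescribes.

```python
import hashlib

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def build_levels(chunks):
    """Return every level of the tree: leaf hashes first, root level last."""
    level = [h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        # Pair up neighbours; an odd node out is paired with itself.
        level = [h(level[i] + level[min(i + 1, len(level) - 1)])
                 for i in range(0, len(level), 2)]
        levels.append(level)
    return levels

def update_leaf(levels, index, new_chunk):
    """Replace one chunk and re-hash only its path to the root.

    Returns how many hashes had to be recomputed."""
    levels[0][index] = h(new_chunk)
    recomputed = 1
    for depth in range(1, len(levels)):
        index //= 2
        below = levels[depth - 1]
        left = below[2 * index]
        right = below[min(2 * index + 1, len(below) - 1)]
        levels[depth][index] = h(left + right)
        recomputed += 1
    return recomputed

chunks = [f"chunk-{i}".encode() for i in range(8)]
levels = build_levels(chunks)
old_root = levels[-1][0]

count = update_leaf(levels, 5, b"modified")
new_root = levels[-1][0]
print(count)  # 4 hashes for 8 chunks: one leaf plus three internal nodes
```

Modifying one of the 8 chunks re-hashes 4 nodes instead of all 15, and the gap widens as the dataset grows.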

Unfortunately, we still fall a bit short on the computational complexity. The above Merkle tree layout is very efficient at incorporating modifications to existing data, but insertions and deletions shift the chunk boundaries and invalidate all the calculated hashes.

Instead of blindly chunking up the dataset, we could use the keys themselves to organize the data into a tree format based on common prefixes! This way an insertion or deletion wouldn’t shift all nodes, but would change only the logarithmic path from root to leaf. This data structure is called a Patricia tree.

Combine the two ideas – the tree layout of a Patricia tree and the hashing algorithm of a Merkle tree – and you end up with a Merkle Patricia tree, the actual data structure used to represent state in Ethereum. Guaranteed logarithmic complexity for modifications, insertions, deletions and verification! A tiny extra is that keys are hashed before insertion to balance the tries.
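The combination can be sketched as a toy hashed prefix trie in Python. This is deliberately not the real Merkle Patricia encoding: it uses SHA-256 instead of Keccak-256, an ad-hoc node hash instead of RLP, and no path compression, so every path here is a fixed 64 nibbles long rather than logarithmic (real Patricia tries collapse single-child runs). The names `Node`, `insert` and `root_hash` are made up for the sketch. What it does show is that an insertion invalidates only the hashes on one root-to-leaf path.

```python
import hashlib

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

class Node:
    def __init__(self):
        self.children = {}  # nibble (one hex character) -> Node
        self.value = None   # set only on the node that terminates a key
        self.hash = None    # cached hash; None means "needs recomputing"

def insert(root, key: bytes, value: bytes):
    """Store value under the hashed key, invalidating hashes on its path only."""
    path = h(key).hex()     # hashing the key spreads entries over the trie
    node = root
    node.hash = None
    for nibble in path:
        node = node.children.setdefault(nibble, Node())
        node.hash = None
    node.value = value

def root_hash(node, counter):
    """Return the node's hash, re-hashing only where the cache was invalidated.

    counter[0] is incremented once per node that is actually re-hashed."""
    if node.hash is None:
        counter[0] += 1
        parts = [b"" if node.value is None else node.value]
        for nibble in sorted(node.children):
            child = node.children[nibble]
            parts.append(nibble.encode() + root_hash(child, counter))
        node.hash = h(b"|".join(parts))
    return node.hash

root = Node()
for i in range(50):
    insert(root, f"account-{i}".encode(), str(i).encode())
full = [0]
before = root_hash(root, full)     # first pass hashes every node

insert(root, b"account-new", b"1")
partial = [0]
after = root_hash(root, partial)   # second pass re-hashes a single path
print(full[0], partial[0])
```

After the extra insertion only the root plus the 64 nodes on the new key's path are re-hashed; every sibling subtree keeps its cached hash.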

State storage in Ethereum

The above description explains why Ethereum stores its state in a Merkle Patricia tree. Alas, as fast as the desired operations got, every choice is a trade-off. The cost of logarithmic updates and logarithmic verification is logarithmic reads and logarithmic storage for every individual key. This is because every internal trie node needs to be saved to disk individually.

I do not have an accurate number for the depth of the account trie at the moment, but about a year ago we were saturating the depth of 7. This means that every trie operation (e.g. read balance, write nonce) touches at least 7-8 internal nodes, and thus does at least 7-8 persistent database accesses. LevelDB also organizes its data into a maximum of 7 levels, so there’s an extra multiplier from there. The net result is that a single state access is expected to amplify into 25-50 random disk accesses. Multiply this with all the state reads and writes that all the transactions in a block touch and you get to a scary number.
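As a rough back-of-the-envelope check, the snippet below multiplies the trie depth by the 4x-5x LevelDB amplification figure quoted elsewhere in this post. The per-block access count is a purely hypothetical workload, picked only to show how the numbers scale.

```python
# Figures quoted in the post.
trie_depth = (7, 8)       # internal trie nodes touched per state access
leveldb_factor = (4, 5)   # disk reads per database access ("4x-5x")

low = trie_depth[0] * leveldb_factor[0]
high = trie_depth[1] * leveldb_factor[1]

# Hypothetical workload, not a measurement.
accesses_per_block = 1_000
block_low = low * accesses_per_block
block_high = high * accesses_per_block

print(f"{low}-{high} disk accesses per state access")
print(f"{block_low:,}-{block_high:,} disk accesses per block")
```

The per-access estimate of 28-40 lands inside the 25-50 range given above.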

[Of course all client implementations try their best to minimize this overhead. Geth uses large memory areas for caching trie nodes; and also uses in-memory pruning to avoid writing to disk nodes that get deleted anyway after a few blocks. That’s for a different blog post however.]

As horrible as these numbers are, these are the costs of operating an Ethereum node and having the capability of cryptographically verifying all state at all times. But can we do better?

Not all accesses are created equal

Ethereum relies on cryptographic proofs for its state. There is no way around the disk amplifications if we want to retain our capability to verify all the data. That said, we can – and do – trust the data we’ve already verified.

There is no point in verifying and re-verifying every state item every time we pull it up from disk. The Merkle Patricia tree is essential for writes, but it’s an overhead for reads. We cannot get rid of it, and we cannot slim it down; but that doesn’t mean we must necessarily use it everywhere.

An Ethereum node accesses state in a few different places:

- When importing a new block, EVM code execution does a more-or-less balanced number of state reads and writes. A denial-of-service block might however do significantly more reads than writes.

- When a node operator retrieves state (e.g. eth_call and family), EVM code execution only does reads (it can write too, but those get discarded at the end and are not persisted).

- When a node is synchronizing, it is requesting state from remote nodes that need to dig it up and serve it over the network.

Based on the above access patterns, if we can short circuit reads not to hit the state trie, a slew of node operations will become significantly faster. It might even enable some novel access patterns (like state iteration) which were prohibitively expensive before.

Of course, there’s always a trade-off. Without getting rid of the trie, any new acceleration structure is extra overhead. The question is whether the additional overhead provides enough value to warrant it?

Back to the roots

We’ve built this magical Merkle Patricia tree to solve all our problems, and now we want to get around it for reads. What acceleration structure should we use to make reads fast again? Well, if we don’t need the trie, we don’t need any of the complexity introduced. We can go all the way back to the origins.

As mentioned in the beginning of this post, the theoretical ideal data storage for Ethereum’s state is a simple key-value store (separate for accounts and each contract). Without the constraints of the Merkle Patricia tree however, there’s “nothing” stopping us from actually implementing the ideal solution!

A while back Geth introduced its snapshot acceleration structure (not enabled by default). A snapshot is a complete view of the Ethereum state at a given block. Abstract implementation wise, it is a dump of all accounts and storage slots, represented by a flat key-value store.

Whenever we wish to access an account or storage slot, we only pay 1 LevelDB lookup instead of 7-8 as per the trie. Updating the snapshot is also simple in theory: after processing a block we do 1 extra LevelDB write per updated slot.

The snapshot essentially reduces reads from O(log n) to O(1) (times LevelDB overhead) at the cost of increasing writes from O(log n) to O(1 + log n) (times LevelDB overhead) and increasing disk storage from O(n log n) to O(n + n log n).

Devil’s in the details

Maintaining a usable snapshot of the Ethereum state has its complexity. As long as blocks are coming one after the other, always building on top of the last, the naive approach of merging changes into the snapshot works. If there’s a mini reorg however (even a single block), we’re in trouble, because there’s no undo. Persistent writes are a one-way operation for a flat data representation. To make matters worse, accessing older state (e.g. 3 blocks old for some DApp or 64+ for fast/snap sync) is impossible.

To overcome this limitation, Geth’s snapshot is composed of two entities: a persistent disk layer that is a complete snapshot of an older block (e.g. HEAD-128); and a tree of in-memory diff layers that gather the writes on top.

Whenever a new block is processed, we do not merge the writes directly into the disk layer, rather just create a new in-memory diff layer with the changes. If enough in-memory diff layers are piled on top, the bottom ones start getting merged together and eventually pushed to disk. Whenever a state item is to be read, we start at the topmost diff layer and keep going backwards until we find it or reach the disk.

This data representation is very powerful as it solves a lot of issues. Since the in-memory diff layers are assembled into a tree, reorgs shallower than 128 blocks can simply pick the diff layer belonging to the parent block and build forward from there. DApps and remote syncers needing older state have access to 128 recent ones. The cost does increase by 128 map lookups, but 128 in-memory lookups is orders of magnitude faster than 8 disk reads amplified 4x-5x by LevelDB.
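The layering described above can be sketched in a few lines of Python. This is a simplified model rather than Geth's implementation: `DiskLayer` and `DiffLayer` are hypothetical stand-ins, plain dictionaries replace LevelDB, and keys are readable strings instead of hashes.

```python
class DiskLayer:
    """Stand-in for the persistent flat snapshot of an older block."""
    def __init__(self, data):
        self.data = dict(data)

    def get(self, key):
        return self.data.get(key)

class DiffLayer:
    """In-memory changes made by one block, stacked on a parent layer."""
    def __init__(self, parent, changes):
        self.parent = parent
        self.changes = dict(changes)

    def get(self, key):
        # Walk from this layer towards the disk until the key shows up.
        layer = self
        while isinstance(layer, DiffLayer):
            if key in layer.changes:
                return layer.changes[key]
            layer = layer.parent
        return layer.get(key)

disk = DiskLayer({"alice": 10, "bob": 5})
block1 = DiffLayer(disk, {"alice": 9})

# Two competing blocks built on the same parent: a one-block reorg.
block2a = DiffLayer(block1, {"bob": 6})
block2b = DiffLayer(block1, {"bob": 7, "carol": 1})

print(block2a.get("bob"), block2b.get("bob"), block1.get("bob"))  # 6 7 5
```

Because each diff layer only points at its parent, switching from `block2a` to `block2b` needs no undo: both views coexist, and the older state at `block1` stays readable.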

Of course, there are lots and lots of gotchas and caveats. Without going into too much detail, here is a quick list of the finer points:

- Self-destructs (and deletions) are special beasts as they need to short circuit diff layer descent.

- If there is a reorg deeper than the persistent disk layer, the snapshot needs to be completely discarded and regenerated. This is very expensive.

- On shutdown, the in-memory diff layers need to be persisted into a journal and loaded back up, otherwise the snapshot will become useless on restart.

- Use the bottom-most diff layer as an accumulator and only flush to disk when it exceeds some memory usage. This allows deduping writes for the same slots across blocks.

- Allocate a read cache for the disk layer so that contracts accessing the same ancient slot over and over don’t cause disk hits.

- Use cumulative bloom filters in the in-memory diff layers to quickly detect whether there’s a chance for an item to be in the diffs, or if we can go to disk immediately.

- The keys are not the raw data (account address, storage key), rather the hashes of these, ensuring the snapshot has the same iteration order as the Merkle Patricia tree.

- Generating the persistent disk layer takes significantly more time than the pruning window for the state tries, so even the generator needs to dynamically follow the chain.
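Two of the points above, deletions short circuiting the descent and the bottom layer acting as a write-deduplicating accumulator, can be illustrated with another small Python sketch. Again, this is an assumption-laden toy: the `DELETED` marker and the `read`, `merge_down` and `flush` helpers are invented here, and the bloom filters, read cache and journal are left out entirely.

```python
DELETED = object()  # marker: the item was removed in this layer

def read(layers, disk, key):
    """Look a key up in the diff layers (newest first), then on disk."""
    for changes in layers:
        if key in changes:
            value = changes[key]
            # A deletion stops the descent; older layers and the disk
            # may still hold a stale value that must not be returned.
            return None if value is DELETED else value
    return disk.get(key)

def merge_down(older, newer):
    """Fold a newer diff into an older one; the newer write wins."""
    merged = dict(older)
    merged.update(newer)
    return merged

def flush(disk, accumulator):
    """Apply the accumulated changes to disk, returning the write count."""
    writes = 0
    for key, value in accumulator.items():
        if value is DELETED:
            disk.pop(key, None)
        else:
            disk[key] = value
        writes += 1
    return writes

disk = {"slot": 1, "other": 2}
block1 = {"slot": 2}
block2 = {"slot": 3, "other": DELETED}
block3 = {"slot": 4}
layers = [block3, block2, block1]  # newest first

print(read(layers, disk, "other"))  # None, although the disk still has 2
print(read(layers, disk, "slot"))   # 4

accumulator = merge_down(merge_down(block1, block2), block3)
writes = flush(disk, accumulator)
print(writes, disk)                 # 2 {'slot': 4}
```

The three blocks contain four individual changes, but after merging only two writes reach the disk, because repeated writes to the same slot collapse into the latest one.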

The good, the bad, the ugly

Geth’s snapshot acceleration structure reduces state read complexity by about an order of magnitude. This means read-based DoS gets an order of magnitude harder to pull off; and eth_call invocations get an order of magnitude faster (if not CPU bound).

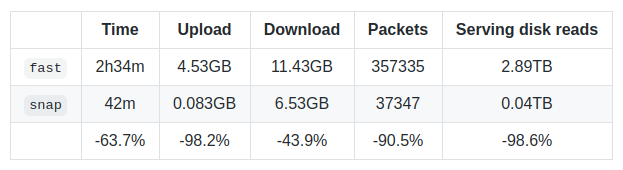

The snapshot also enables blazing fast state iteration of the most recent blocks. This was actually the main reason for building snapshots, as it permitted the creation of the new snap sync algorithm. Describing that is an entirely new blog post, but the latest benchmarks on Rinkeby speak volumes:

Of course, the trade-offs are always present. After initial sync is complete, it takes about 9-10h on mainnet to construct the initial snapshot (it’s maintained live afterwards) and it takes about 15+GB of additional disk space.

As for the ugly part? Well, it took us over 6 months to feel confident enough about the snapshot to ship it, but even now it’s behind the --snapshot flag and there’s still tuning and polishing to be done around memory usage and crash recovery.

All in all, we’re very proud of this improvement. It was an insane amount of work and it was a huge shot in the dark implementing everything and hoping it will work out. Just as a fun fact, the first version of snap sync (leaf sync) was written 2.5 years ago and was blocked ever since because we lacked the necessary acceleration to saturate it.

Epilogue

Hope you enjoyed this first post of Ask about Geth. It took me about twice as long to finish as I aimed for, but I felt the topic deserved the extra time. See you next week.

[PS: I deliberately didn’t link the asking/voting website into this post as I’m sure it’s a temporary thing and I don’t want to leave broken links for posterity; nor have someone buy the name and host something malicious in the future. You can find it among my Twitter posts.]
